Data Warehouses, Data Marts, and Data Lakes

1. Data Warehouse:

- Definition: A data warehouse is a large, multi-purpose storage system designed for analytical and reporting purposes. It stores structured, historical data that has already been processed, cleaned, and organized for analysis.

- Use Case: A data warehouse is ideal when you need to consolidate large volumes of operational data from multiple systems across the organization and keep it readily available for reporting and analysis.

- Key Features:

  - Single source of truth: Data is cleansed and conformed across systems.

  - Business Intelligence: Enables reporting, dashboards, and performance analytics.
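To make the reporting role concrete, here is a minimal sketch of a warehouse-style query using Python's built-in `sqlite3` module. The fact/dimension layout and the table names (`fact_sales`, `dim_product`) are assumptions chosen for illustration; the notes above do not prescribe a schema.

```python
import sqlite3

# In-memory database standing in for a warehouse (illustrative only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE fact_sales (product_id INTEGER, sale_date TEXT, amount REAL);
INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO fact_sales VALUES
    (1, '2024-01-05', 20.0),
    (1, '2024-02-10', 30.0),
    (2, '2024-01-20', 50.0);
""")

# A typical reporting query: historical revenue aggregated per category.
report = conn.execute("""
    SELECT p.category, SUM(f.amount) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()

print(report)
```

The data is already cleaned and structured before it arrives, so the query can focus purely on aggregation.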

2. Data Mart:

- Definition: A data mart is a subset of a data warehouse, focusing on a particular business function or department (e.g., finance, marketing, or sales).

- Use Case: Provides specialized reporting and analysis for specific teams or departments. For example, the sales team may only need data relevant to sales, not all data across the organization.

- Key Features:

  - Isolated performance: Since it is smaller, a data mart can be optimized for performance in a particular business area.

  - Security: Has isolated security measures based on the department’s access needs.
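One way to picture the "subset" relationship is a view that exposes only one department's rows. The sketch below uses `sqlite3`; the table and view names (`warehouse_facts`, `sales_mart`) are hypothetical, and a real data mart may well be a separate physical store rather than a view.

```python
import sqlite3

# In-memory database standing in for the warehouse (illustrative only).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE warehouse_facts (department TEXT, metric TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO warehouse_facts VALUES (?, ?, ?)",
    [
        ("sales", "revenue", 1200.0),
        ("sales", "revenue", 800.0),
        ("finance", "expenses", 500.0),
        ("marketing", "ad_spend", 300.0),
    ],
)

# The "data mart" here is a view exposing only the sales rows.
conn.execute(
    "CREATE VIEW sales_mart AS "
    "SELECT metric, amount FROM warehouse_facts WHERE department = 'sales'"
)

sales_rows = conn.execute(
    "SELECT metric, amount FROM sales_mart ORDER BY amount"
).fetchall()
total_revenue = conn.execute("SELECT SUM(amount) FROM sales_mart").fetchone()[0]

print(sales_rows)
print(total_revenue)
```

The sales team queries `sales_mart` and never sees finance or marketing rows, which is the isolation the features above describe.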

3. Data Lake:

- Definition: A data lake is a vast storage repository that holds all forms of data (structured, semi-structured, and unstructured) in its raw format. The data in the lake is tagged with metadata so that it can be located and analyzed later.

- Use Case: A data lake is suitable when you have large volumes of raw data coming in continuously and do not yet have specific predefined use cases for all of it. It is often used in predictive analytics and machine learning projects.

- Key Features:

  - Flexibility: Allows you to store data without imposing structure upfront.

  - Data retention: Holds all data types, including data with no immediate use that may prove valuable in the future.

  - Advanced analytics: Used for more complex or forward-looking data analysis, such as data science, AI, and predictive modeling.
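The idea of storing raw data first and applying structure only when reading can be sketched as follows. Everything here is an assumption for illustration: the `lake` list stands in for object storage, and `ingest` and `read_temperatures` are hypothetical helper names.

```python
import json
from datetime import datetime, timezone

# A list standing in for object storage; each entry keeps the raw payload
# untouched plus a small metadata tag describing it.
lake = []

def ingest(raw_payload: str, source: str, fmt: str) -> None:
    """Store the payload as-is, tagged with metadata (no schema enforced)."""
    lake.append({
        "raw": raw_payload,
        "metadata": {
            "source": source,
            "format": fmt,
            "ingested_at": datetime.now(timezone.utc).isoformat(),
        },
    })

ingest('{"sensor": "t1", "temp_c": 21.5}', source="iot", fmt="json")
ingest("t2,19.0", source="legacy_export", fmt="csv")
ingest("free-text maintenance note", source="helpdesk", fmt="text")

def read_temperatures() -> list[float]:
    """Apply structure only at read time, based on each item's format tag."""
    temps = []
    for item in lake:
        fmt = item["metadata"]["format"]
        if fmt == "json":
            temps.append(json.loads(item["raw"])["temp_c"])
        elif fmt == "csv":
            temps.append(float(item["raw"].split(",")[1]))
        # Other formats stay in the lake untouched for future use cases.
    return temps

temperatures = read_temperatures()
print(temperatures)
```

The free-text note is retained even though nothing reads it yet, which mirrors the retention feature listed above.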

ETL Process (Extract, Transform, Load)

The ETL process is central to turning raw data into actionable insights:

- Extract:

  - Definition: This step involves collecting data from various sources, such as operational databases, third-party APIs, flat files, or social media.

  - Approaches:

    - Batch Processing: Data is extracted in large chunks at scheduled intervals.

    - Stream Processing: Data is continuously extracted in real time as it is created or updated.
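The difference between the two extraction approaches can be sketched with plain Python iterators. The function names `extract_batches` and `extract_stream` are hypothetical, and the in-memory list stands in for a real source such as a database or API.

```python
from itertools import islice
from typing import Iterable, Iterator

def extract_batches(records: Iterable[dict], batch_size: int) -> Iterator[list[dict]]:
    """Batch extraction: yield records in fixed-size chunks."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

def extract_stream(records: Iterable[dict]) -> Iterator[dict]:
    """Stream extraction: yield each record as soon as it is available."""
    for record in records:
        yield record

# Stand-in source data (illustrative only).
source_records = [{"id": i} for i in range(5)]

batches = list(extract_batches(source_records, batch_size=2))
streamed = list(extract_stream(source_records))

print([len(b) for b in batches])
print(len(streamed))
```

In practice the batch version would be triggered by a scheduler, while the stream version would sit on an unbounded source such as a message queue.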

- Transform:

  - Definition: After data is extracted, it must be cleaned, standardized, and transformed to fit the desired analysis format.

  - Tasks:

    - Data Cleaning: Removing duplicates, correcting errors, and filtering unnecessary data.

    - Data Standardization: Making date formats, units of measurement, and other data elements consistent.

    - Data Enrichment: Deriving or adding fields that make records more useful, for example, splitting a full name into first, middle, and last names.

    - Business Rules: Applying custom logic that ensures the data adheres to business standards or needs.
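The four transformation tasks above can be sketched in one small function. The sample rows, the two accepted date formats, and the "reject negative amounts" rule are all assumptions made for this example.

```python
from datetime import datetime

raw_rows = [
    {"full_name": "Ada M. Lovelace", "signup": "2024-01-15", "amount": "120.50"},
    {"full_name": "Ada M. Lovelace", "signup": "2024-01-15", "amount": "120.50"},
    {"full_name": "Alan Turing", "signup": "15/02/2024", "amount": "75"},
    {"full_name": "Grace Hopper", "signup": "2024-03-01", "amount": "-5"},
]

def standardize_date(value: str) -> str:
    """Standardization: accept ISO or DD/MM/YYYY and return ISO 8601."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value}")

def split_name(full_name: str) -> dict:
    """Enrichment: derive first, middle, and last name fields."""
    parts = full_name.split()
    return {"first": parts[0], "middle": " ".join(parts[1:-1]), "last": parts[-1]}

def transform(rows: list[dict]) -> list[dict]:
    seen = set()
    result = []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen:  # cleaning: drop exact duplicates
            continue
        seen.add(key)
        amount = float(row["amount"])
        if amount < 0:  # business rule (assumed): reject negative amounts
            continue
        result.append({
            **split_name(row["full_name"]),
            "signup": standardize_date(row["signup"]),
            "amount": amount,
        })
    return result

transformed = transform(raw_rows)
print(transformed)
```

Of the four input rows, the duplicate and the negative-amount row are dropped, and the remaining two come out with consistent dates and split name fields.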

- Load:

  - Definition: Once the data is transformed, it is loaded into the data repository (such as a data warehouse, data mart, or data lake).

  - Methods:

    - Initial Loading: Populating the data store for the first time.

    - Incremental Loading: Periodically applying updates to the data.

    - Full Refresh: Overwriting the data with fresh information.
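The three loading methods differ mainly in what happens to rows that already exist. The sketch below uses `sqlite3` with a hypothetical `customers` table; the incremental step is approximated with SQLite's `INSERT OR REPLACE`, which is only one of several ways to apply updates.

```python
import sqlite3

# In-memory database standing in for the target repository (illustrative only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

def initial_load(rows):
    """Populate the empty table for the first time."""
    conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", rows)

def incremental_load(rows):
    """Apply only new or changed rows, matched on the primary key."""
    conn.executemany(
        "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)", rows
    )

def full_refresh(rows):
    """Discard the existing contents and reload everything."""
    conn.execute("DELETE FROM customers")
    conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", rows)

def snapshot():
    return conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()

initial_load([(1, "Ada"), (2, "Alan")])
after_initial = snapshot()

incremental_load([(2, "Alan Turing"), (3, "Grace")])
after_incremental = snapshot()

full_refresh([(10, "Edsger")])
after_refresh = snapshot()

print(after_initial, after_incremental, after_refresh)
```

The incremental load touches only rows 2 and 3 and leaves row 1 alone, whereas the full refresh leaves nothing of the earlier state behind.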

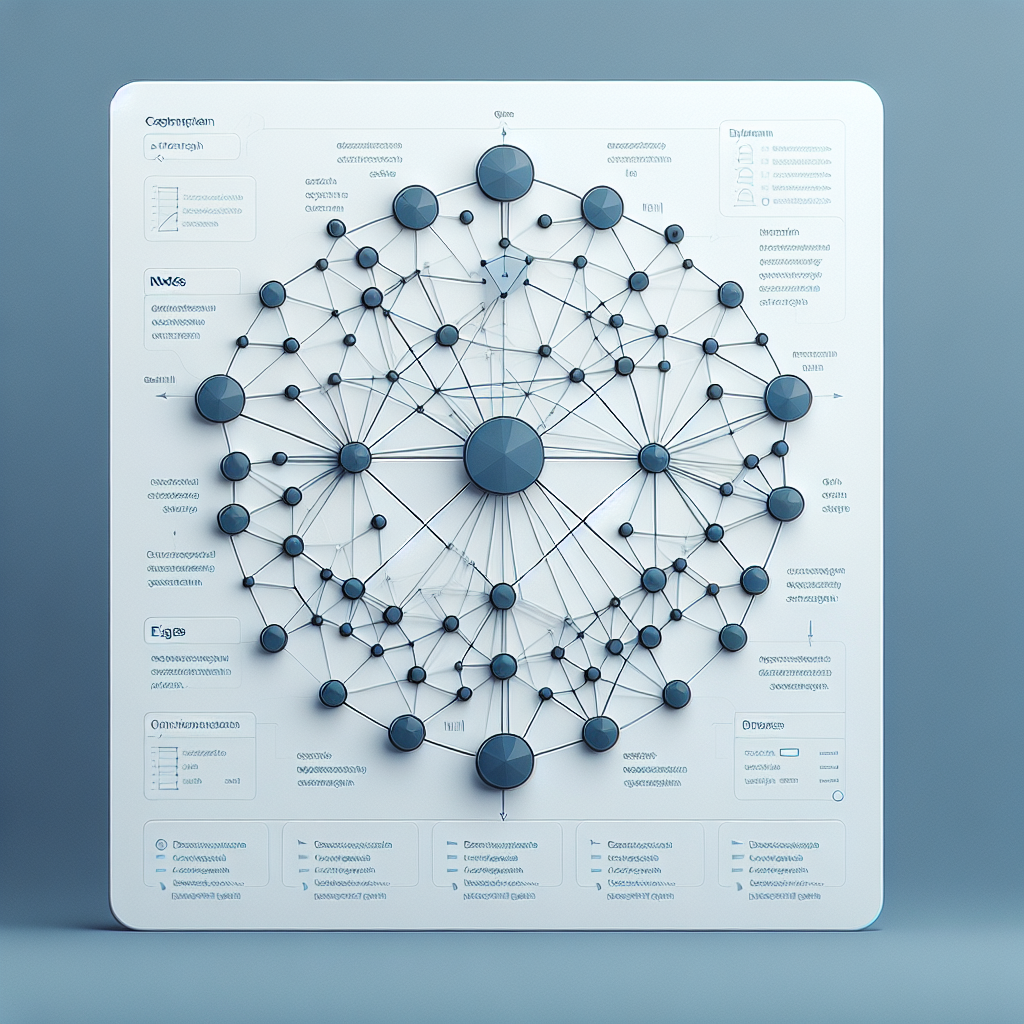

Data Pipelines vs. ETL

- Data Pipelines: A data pipeline is the entire process of moving data from its source to its destination, of which extraction, transformation, and loading (ETL) is one form. The broader term covers batch processing, stream processing, or a combination of both.

- ETL as Part of Data Pipelines: ETL is a specific subset of the data pipeline process, focusing only on extracting, transforming, and loading data.

- Use Cases:

  - Streaming Data: Data pipelines can support real-time data processing, which is useful for continuously updated data like sensor monitoring.

  - Batch Processing: Traditional data pipelines may still rely on scheduled batch processes.
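A pipeline can be sketched as a chain of stages that works the same way over a finite batch or a live feed. The names `run_pipeline`, `drop_invalid`, `add_fahrenheit`, and `sensor_feed` are hypothetical, and the sensor data is made up for illustration.

```python
from typing import Callable, Iterable, Iterator

Stage = Callable[[Iterable[dict]], Iterator[dict]]

def run_pipeline(source: Iterable[dict], stages: list[Stage]) -> Iterator[dict]:
    """Chain stages lazily so records flow one at a time from source to sink."""
    data: Iterable[dict] = source
    for stage in stages:
        data = stage(data)
    return iter(data)

def drop_invalid(records):
    """Skip readings that have no temperature value."""
    for r in records:
        if r.get("temp_c") is not None:
            yield r

def add_fahrenheit(records):
    """Add a derived Fahrenheit field to each reading."""
    for r in records:
        yield {**r, "temp_f": r["temp_c"] * 9 / 5 + 32}

def sensor_feed():
    """Hypothetical sensor source; a real feed would not terminate."""
    yield {"sensor": "a", "temp_c": 20.0}
    yield {"sensor": "b", "temp_c": None}
    yield {"sensor": "c", "temp_c": 100.0}

stages = [drop_invalid, add_fahrenheit]

# Batch mode: the whole input is collected first, then processed.
batch_result = list(run_pipeline(list(sensor_feed()), stages))

# Streaming mode: records are processed as the generator produces them.
stream_result = list(run_pipeline(sensor_feed(), stages))

print([r["temp_f"] for r in stream_result])
```

Because the stages are generators, the same pipeline definition serves both use cases; only the source changes.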

Data Integration

- Definition: Data integration is the practice of combining data from different sources to provide a unified view of the data, facilitating better analytics and decision-making.

- Role in ETL & Data Pipelines:

  - Data pipelines are used to move data and enable integration. ETL is a key process within data integration, as it helps in transforming data into a format that can be used for analysis.

  - Modern data integration tools allow you to combine data from multiple sources (databases, APIs, cloud platforms) and ensure data quality, governance, and security.
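The "unified view" idea can be sketched by merging records from two sources on a shared key. The two sources (`crm_records`, `billing_records`), their field names, and the `integrate` helper are assumptions for this example; real integration tools add quality checks, governance, and security on top.

```python
# Two hypothetical sources that describe the same customers with different keys.
crm_records = [
    {"customer_id": 1, "name": "Ada Lovelace", "email": "ada@example.com"},
    {"customer_id": 2, "name": "Alan Turing", "email": "alan@example.com"},
]
billing_records = [
    {"cust": 1, "total_spent": 250.0},
    {"cust": 3, "total_spent": 40.0},
]

def integrate(crm: list[dict], billing: list[dict]) -> list[dict]:
    """Merge both sources on customer ID into one record per customer."""
    unified = {}
    for row in crm:
        unified[row["customer_id"]] = {
            "customer_id": row["customer_id"],
            "name": row["name"],
            "email": row["email"],
            "total_spent": None,  # filled in if billing knows this customer
        }
    for row in billing:
        entry = unified.setdefault(
            row["cust"],
            {"customer_id": row["cust"], "name": None, "email": None, "total_spent": None},
        )
        entry["total_spent"] = row["total_spent"]
    return [unified[key] for key in sorted(unified)]

unified_view = integrate(crm_records, billing_records)
print(unified_view)
```

Customers known to only one source still appear in the result with `None` in the missing fields, so gaps between systems stay visible instead of being silently dropped.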

Data Integration Tools and Platforms

Modern data integration tools are designed to support complex, large-scale data environments. Popular tools include:

- Commercial Solutions: IBM, Talend, SAP, Oracle, Microsoft, and Qlik.

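- Other Commercial Platforms: Dell Boomi, Jitterbit, and SnapLogic. Although sometimes grouped with open-source tools, these are commercially licensed integration platforms.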

- Cloud-Based iPaaS (Integration Platform as a Service): Adeptia Integration Suite, Google Cloud’s Integration services, and Informatica.