Computer Vision

Computer vision (CV) has been around since the 1950s, e.g. the barcode scanner.

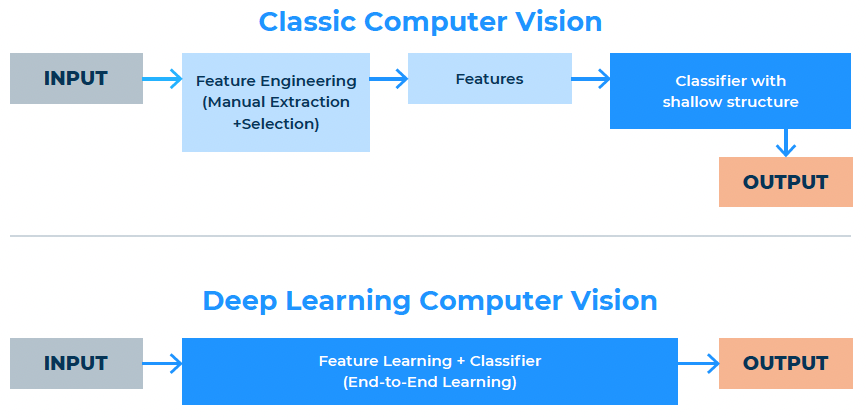

There are two types of computer vision algorithms:

- Classical CV: Relies on prebuilt libraries of features. Images are collected, labelled according to similar characteristics, and grouped into a dataset or library of features. Example: sorting good and bad tomatoes.

Pros: The algorithms work well for object detection and run fast with high accuracy.

Cons: They are not powerful enough for every task; deep learning CV methods perform best.

- Deep Learning CV: Generates its own library of features by using a CNN (convolutional neural network).

CV Applications

- Image Classification: e.g. is this an image of a dog or a cat?

- Image Segmentation: Partitions an image into multiple regions that can be examined or manipulated, e.g. Zoom blurring the background.

- Object Detection: Advanced object detection can recognise multiple objects in one image. Is there a pedestrian in this image or not? Are there groceries in the street or not?

- Object Tracking: Monitors movement. Where is the object going? Is the car travelling too fast?

- Image Generation: Generates 3D from 2D. This is how Google Maps produces a 3D map when moving from the 2D view.

- Edge Detection: Identifies the outer edges of objects in an image to help identify what is in the image.

- Face Detection: How many faces are in the image? Used to focus a camera on a face.

- Facial Recognition: One step beyond face detection. Can identify who is in the photo.

- OCR (Optical Character Recognition): Reads handwritten, scanned or printed documents.

- Pattern Detection: e.g. QR codes

- Feature Matching
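To make the edge detection item above more concrete, here is a minimal sketch of one classical technique, the Sobel operator, in plain Python. The function name `sobel_edges`, the threshold value and the tiny test image are assumptions made for illustration only; real CV pipelines would normally rely on an image-processing library rather than nested loops.

```python
def sobel_edges(image, threshold=2.0):
    """Return a binary edge map for a 2D grayscale image (list of lists)."""
    # Sobel kernels approximate the horizontal and vertical brightness gradients.
    gx_kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    gy_kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are skipped because the 3x3 window does not fit there.
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = gy = 0.0
            for dr in range(3):
                for dc in range(3):
                    pixel = image[r + dr - 1][c + dc - 1]
                    gx += gx_kernel[dr][dc] * pixel
                    gy += gy_kernel[dr][dc] * pixel
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[r][c] = 1 if magnitude > threshold else 0
    return edges


# Hypothetical 6x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
edges = sobel_edges(image)
for row in edges:
    print(row)
```

The edge map marks only the columns where brightness jumps from dark to bright, which is the kind of outline a later step could use to decide what is in the image.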

10 Use cases of CV adding substantial value to companies today

- Skanska: One of the largest construction companies in the world. Uses CV for work replacement. Its workers walk an average of 6 miles per day to get the right materials, tools and equipment to the right place. Using CV analysis, Skanska placed equipment and tools in the right places, so that workers walk 2 miles less per day. Productivity improved by about 1 hour per day, roughly a 12% increase.

- Tesla: Uses CV in its self-driving cars. The car uses multiple scanners to analyse its surroundings.

- Harvest Croo Robotics: CV for automatic crop harvesting. It identifies crops that are ready for harvesting and harvests them.

- eBay: CV for image search. Consumers worldwide spend $6 trillion on the web every year. If you like something, take a picture and upload it to eBay, and it will show hundreds of products matching your image. eBay claims 50% of all sales come from mobile (vs 40% in 2016).

- AI Cure: Uses CV for medication adherence. Approximately half of all patients don’t take their medicine as prescribed. AI Cure claims 95% adherence on initial samples and says it significantly helped the most vulnerable groups.

- Osprey: Uses CV for oil well monitoring. Helps to monitor oil wells in remote locations, so that instead of visiting every well, crews visit only the malfunctioning ones. Shell invested several million in the Osprey startup.

- Roaf: Uses CV for waste sorting. We generate over 2 billion tons of waste every year and recycle only 30% of recyclable waste. $11 billion worth of recyclable packaging is thrown away in the US alone, which is bad for the environment. Roaf’s solution can sort 40 tons of waste per hour, serves 190,000 inhabitants, and is up to 97% accurate in sorting. The target is to reach 70% reuse and recycling by 2030.

- Swiss Federal Institute of Technology: CV for stroke recovery. About 15% of people experience a stroke, and it causes serious long-term disability in developed countries. Recovery is expensive: it costs $11,000 in the first year in the US. Initial testing of the CV solution shows full recovery effectiveness.

- Cortexica: CV to monitor workplace safety. An average industrial business experiences 27 injuries leading to days off every year. Almost half of them are due to human error, e.g. 30% of workers not wearing the right protective gear at the right time. The solution claims to prevent 60k accidents costing 122 pounds every year in the UK alone.

- Tomra: CV for automatic ore sorting. The mining industry consumes 3% of the world’s energy because precious materials are present in ore in very small quantities, e.g. average gold-bearing ore contains about 5 g of gold per ton. The CV solution sorts ore from waste, reducing energy consumption by 15% and water consumption by 4 cubic metres per tonne of ore.

Deep Learning

Deep learning (DL) is a family of ML methods based on artificial neural networks. In DL there is no pre-defined framework. With non-DL methods, there are certain frameworks that we try to fit to the data to explain the patterns we are seeing. If they work, great; otherwise we try something else until we find something that fits the data well and helps us extract those insights.

Cons: DL requires a lot of data (thousands, hundreds of thousands, or even millions), much more data than other algorithms to learn. On the flip side, the benefits that we get are incredible.

DL Use Cases

- Google AI: DL for cancer detection. Cancer is the second leading cause of death (10 million per year), after cardiovascular disease. Pathologists disagree on the diagnosis in over 50% of cases of breast and prostate cancer. Google AI identifies metastasis with 89% accuracy, compared to 73% for a trained pathologist with unlimited time. Although it is unlikely to replace pathologists immediately, the solution helps them improve their diagnosis of cancer.

- Spotify: DL recommendation engine. Online streaming accounts for 75% of music industry revenue, with $3.4 billion of revenue in 2018. Streaming services stopped relying on organic searches long ago, and recommendations based on previous purchases are a classic way of driving business. With its new recommendations, Spotify increased active users from 75 million to 100 million.

- GoldSpot Discoveries: DL for mineral exploration. Earth’s non-renewable natural resources are getting harder and harder to find, so companies need to dig deeper and over wider areas. The DL solution analyses geological data to provide a predictive method for finding gold deposits. It identified 86% of existing gold deposits in Abitibi, Quebec, despite working off data from only 4% of the total surface area.

- Digital Domain: DL for VFX (visual effects). In blockbuster movies, VFX represent 20-50% of the movie budget. The DL solution gave directors unprecedented insight, during the actual shooting, into how the movie will look, e.g. Thanos.

- Ayasdi: DL for anti-money laundering. Money laundering transactions total approximately 1-2 trillion dollars annually, an incredible 2-5% of global GDP. An unnamed bank reduced its investigative volume by 20% thanks to the DL solution.

- Deep Instinct: DL for cybersecurity. Cybercrime costs the global economy $600 billion every year. An unnamed Fortune 500 company installed the solution. Within the first week of deployment, the algorithm found 12 infected endpoints and detected that 10% of the company’s devices were infected.

- Doxel: DL for productivity tracking. Around 80% of construction projects are delivered over budget, with typical delivery times 20 months behind schedule. This is because project managers lack instant visibility of progress: most progress tracking is still done with physical measurement, visual inspection and clipboards. The solution takes pictures from a drone, detects deviations from the planned schedule and prompts the project team to act before delays start to pile up. In one project, labour productivity increased by 38% and the project was delivered 11% under budget.

- Amazon Rekognition: DL for facial recognition. Facial recognition is currently under scrutiny due to allegations of bias and concerns over individual safety, and the technology is still in its infancy and developing fast. Rekognition was employed to find matches with 80%, 95% or even higher similarity, which allowed law enforcement officers to quickly narrow their search and follow up on leads.

- Zestimate: DL for real estate prices.

- Zest Finance: DL for loan approval. The solution analyses customer credit scores and helped a top US auto lender cut its losses by 23% annually.

If DL algorithms learn on their own, then why do we need data scientists or ML engineers to build them? And why are experts in this space so expensive?

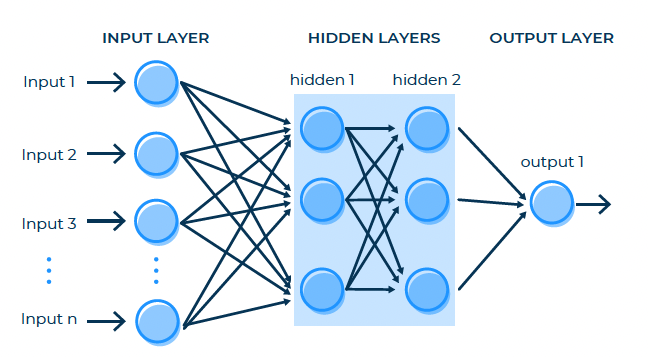

Here we see a basic input-output type of neural network: it has one input layer with 4 neurons and one output layer with 1 neuron. Now what we can do is add another layer in between the input and output layers. This layer is called a “hidden” layer, and in this case it has 6 neurons. We can add another hidden layer, and another. Each one of these neural networks, whether it has zero hidden layers, or one, or two, or 10, is a valid neural network. Moreover, we can also change the number of neurons in each layer. For example, the first hidden layer might have 8 neurons, the 2nd hidden layer might have only 2, and the 3rd hidden layer might go back to 6, forming a sort of bottleneck shape. That is also a valid neural network. These different ways that neural networks can be constructed are called architectures.
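One way to picture an architecture is as a list of layer sizes. The sketch below is illustrative only: it assumes fully connected layers with sigmoid activations and random, untrained weights, and the helper names (`build_network`, `count_parameters`, `forward`) are hypothetical. It builds the three shapes described above and counts how many weights and biases each one would have to learn.

```python
import math
import random


def build_network(layer_sizes, seed=0):
    """Create random weights and zero biases for each pair of adjacent layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def count_parameters(layers):
    """Total number of weights and biases in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


def forward(layers, inputs):
    """Pass the inputs through every layer using a sigmoid activation."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            1.0 / (1.0 + math.exp(-(sum(w * a for w, a in zip(row, activations)) + b)))
            for row, b in zip(weights, biases)
        ]
    return activations


# Each architecture is just a list: input size, hidden sizes, output size.
architectures = {
    "no hidden layer": [4, 1],
    "one hidden layer": [4, 6, 1],
    "bottleneck": [4, 8, 2, 6, 1],
}
param_counts = {
    name: count_parameters(build_network(sizes))
    for name, sizes in architectures.items()
}
output = forward(build_network(architectures["bottleneck"]), [0.5, -0.2, 0.1, 0.9])
print(param_counts)
print(output)
```

Changing a single number in one of these lists produces a different architecture with a different parameter count, which hints at how large the space of possible designs is.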

Every purpose, every application, every challenge will have a different architecture that serves it best. Finding the right neural network architecture is actually a very creative process. And that’s why expert ML engineers and data scientists who build DL algorithms are in such high demand and are so expensive to have on your team – because they are doing highly creative work which requires their unique touch and input.

Reinforcement Learning

There are three main groups of algorithms in ML:

- Unsupervised Learning (UL): used for discovery of new patterns. For example, clustering of customers into groups based on their similarities. The core principle here is that the resulting groups did not exist prior, but rather are suggested by the machine in the process.

- Supervised Learning (SL): Used to teach a machine to search for and identify patterns that we have seen before. For example, classification of pictures of dogs and cats into the two categories “dogs” and “cats”. First, we show the algorithm thousands of already labelled images so it can extract features that are essential to dogs and features that are essential to cats. After this, the algorithm will be able to categorise new images as either “dogs” or “cats”. The difference between this approach and unsupervised learning is that we first have to provide the labelled data for the algorithm to learn from.

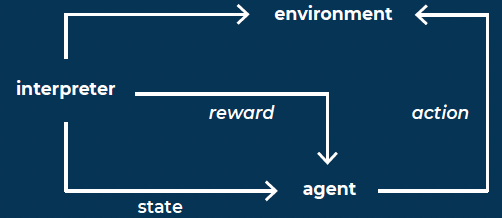

- Reinforcement Learning (RL): An imaginary agent is presented with a problem and is rewarded with a “+1” for finding a solution or punished with a “-1” for not finding one. Unlike with Supervised Learning, the agent is not given instructions on how to perform the task. Instead, it performs random actions and interacts with its environment. It learns through trial and error which actions are good and which actions are bad.
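The trial-and-error idea can be sketched with tabular Q-learning, one common RL algorithm, on a made-up problem. Everything here is an assumption for illustration: a corridor of five cells, an agent starting in the middle, a reward of +1 for reaching the right end and -1 for falling off the left end, and hand-picked learning settings.

```python
import random

N_STATES = 5                 # corridor cells 0..4
START, PIT, GOAL = 2, 0, 4   # hypothetical layout
ACTIONS = (-1, +1)           # index 0 = step left, index 1 = step right


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values purely from rewards; no instructions are given."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = START
        while state not in (PIT, GOAL):
            # Explore at random sometimes (or when undecided), else exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = state + ACTIONS[action]
            if next_state == GOAL:
                reward = 1.0      # solution found
            elif next_state == PIT:
                reward = -1.0     # failure
            else:
                reward = 0.0
            future = 0.0 if next_state in (PIT, GOAL) else max(q[next_state])
            # Nudge the estimate towards reward plus discounted future value.
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


q_table = train()
policy = ["left" if q[0] > q[1] else "right" for q in q_table[1:4]]
print(policy)
```

The agent starts with no knowledge and moves at random; the learned policy for the three inner cells comes only from the +1 and -1 signals it collected along the way.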

Pros of RL

- Greater scope of application than any form of supervised learning. Doesn’t require large datasets.

- Innovative: SL only imitates patterns in the original datasets. An SL algorithm can do a task as well as or better than its master, but it can’t learn a completely new approach to solving a problem. RL can come up with entirely new solutions.

- Bias resistance: RL is resistant to bias in a provided labelled dataset.

- Learns online, in real time.

- Goal oriented: Can be used for sequences of actions, e.g. a robot playing soccer or a car reaching its destination.

- Adaptable: Unlike SL, RL doesn’t require retraining. It adapts to new environments automatically.

RL in Marketing

RL will revolutionise the way we do marketing.

Use Cases

- Creating personalised recommendations, e.g. Netflix.

- Optimising the advertising budget. Understanding which advertisements bring ROI is an incredibly difficult task.

- Selecting the best content for advertisements. RL blows A/B testing out of the water. With A/B testing we have to wait until the end of the test to see the result, whereas RL works on the fly: it can find the optimal content much quicker and start showing it sooner.

- Increasing customer lifetime value. A comprehensive approach nurtures the customer and maximises lifetime value.

- Predicting customer responses to price plan changes. Using inverse RL we can observe consumer behaviour: do they want to save money or buy high-end brands, what quality-to-price ratio and level of service do they expect, and which features are most desirable?
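The contrast with A/B testing in the content-selection use case can be illustrated with an epsilon-greedy multi-armed bandit, one of the simplest RL-style methods. This is a sketch under stated assumptions, not how any particular marketing tool works: the three click-through rates are hypothetical, clicks are simulated, and `epsilon_greedy_ads` is an invented helper name.

```python
import random


def epsilon_greedy_ads(true_ctrs, n_rounds=10_000, epsilon=0.1, seed=0):
    """Show one ad per round, shifting traffic to the best performer on the fly."""
    rng = random.Random(seed)
    shows = [0] * len(true_ctrs)
    clicks = [0] * len(true_ctrs)
    for _ in range(n_rounds):
        if rng.random() < epsilon:
            # Explore: occasionally try a random ad.
            ad = rng.randrange(len(true_ctrs))
        else:
            # Exploit: show the ad with the best observed click-through rate.
            estimates = [c / s if s else 0.0 for c, s in zip(clicks, shows)]
            ad = estimates.index(max(estimates))
        shows[ad] += 1
        # Simulated customer: clicks with the ad's (hidden) true probability.
        if rng.random() < true_ctrs[ad]:
            clicks[ad] += 1
    return shows, clicks


HYPOTHETICAL_CTRS = [0.05, 0.10, 0.30]   # assumed values, for illustration only
shows, clicks = epsilon_greedy_ads(HYPOTHETICAL_CTRS)
best_ad = shows.index(max(shows))
print(shows, clicks, best_ad)
```

An A/B test would split impressions evenly until the test ends. Here most impressions drift to the strongest ad while the experiment is still running, which is the "on the fly" behaviour described above.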

10 Use Cases of RL

- Google AlphaGo: Learned the game of Go and became unbeatable.

- Google Energy Management: A Google DeepMind neural network was trained on different operating scenarios and parameters. It reduced the amount of energy used for cooling Google’s data centres by up to 40%.

- Decision Service: Advertising. Achieved a CTR (click-through rate) improvement of 25-30% and a revenue lift of 18%.

- Trendyol: Email advertising. An email automation tool that distinguishes which messages will be most relevant to which customers. 30% lift in CTR, 60% lift in response rates, 130% lift in conversion rates.

- Alibaba: Advertisement display bidding. Developed a system that estimates how likely a customer is to click on an ad, based on their preferences and the content of the ad itself. Return on investment increased by 240% without increasing the ad budget.

- Electa: Energy management. Scientists at Electa optimised a hot water control system. Applying the technology to houses reduced energy consumption by 20% without impacting comfort.

- Fanuc/Tesla: Manufacturing and robotics. A robot quickly learns to perform new tasks, including sorting products or delivering them. Tesla deployed 160 robots in its plant, which learn to perform new tasks with 90% accuracy overnight.

- Inventory Management.

- Cambridge University: Healthcare. AI entered healthcare as supervised learning, to replicate the work of medical professionals. Researchers developed RL algorithms that improve treatment policies for patients with sepsis.

- Self-driving cars.

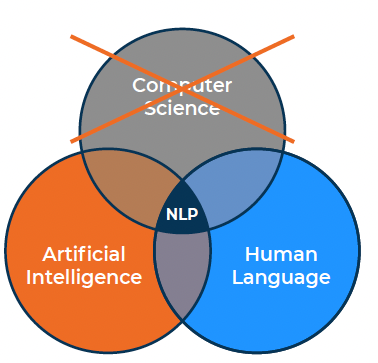

NLP (Natural Language Processing)

NLP is used in applications ranging from VPAs (Virtual Personal Assistants) and automatic translation to legal document review and chatbots.

Structured data

Data that has a pre-defined specific format. For example, spreadsheets, tables, databases.

Unstructured data

Data that doesn’t follow a pre-defined specific format. For example, emails, chats, blogs, books, audio files, video files, images.

This data, like all data, contains power: Power to know your customers better, power to increase efficiency and the power to scale. However, because of its unstructured format, it is much harder to leverage. And that is why NLP is an exploding field right now. Businesses are in a race to be the first in their industry to unlock the potential of their unstructured data. Based on various sources, unstructured data accounts for 70-90% of data in the world. Most of this data contains text in some form – whether written or audio.

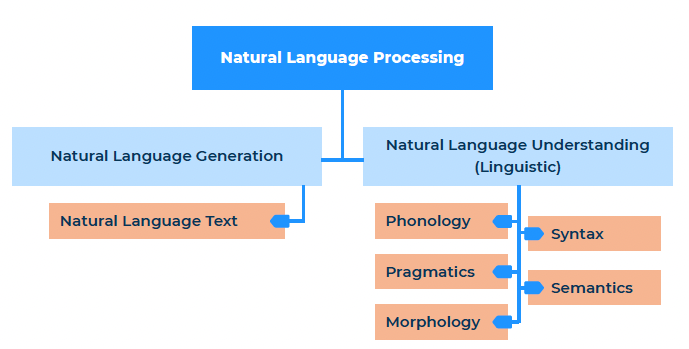

There are two parts to NLP:

- NLU: Natural Language Understanding.

NLU refers to mapping the given natural language input into a formal representation and analysing it. If the input is in the form of audio, then Speech Recognition is applied first to convert it into text. Then the hard part starts: interpreting the meaning of the text. Human language is complex. Some words, for example “bank” or “leaves”, can carry different meanings depending on the context they are in. Once the meaning is extracted, the system categorises the input and comes up with an appropriate action or response.

- NLG: Natural Language Generation.

NLG is the process of producing meaningful phrases and sentences in the form of natural language from some internal representation. NLG is generally much easier than NLU: once we know the meaning that needs to be expressed, there are certain rules of language, such as syntax and semantics, that need to be followed in order to create an appropriate sentence. If the text needs to be put into audio, as in the case of Siri or Alexa, then Speech Generation comes in, but this is not always required.
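The two halves can be sketched with a deliberately tiny example built around the word “bank”. This is a toy under loud assumptions: the clue words, the sense labels and the reply templates are all invented for illustration, and real NLU systems use statistical or neural models rather than keyword overlap.

```python
import re

# Hypothetical context clues for two senses of the word "bank".
SENSE_CLUES = {
    "financial_institution": {"money", "account", "loan", "deposit"},
    "river_side": {"river", "water", "fishing", "shore"},
}

# Hypothetical reply templates, one per sense.
TEMPLATES = {
    "financial_institution": "Here, 'bank' means a financial institution.",
    "river_side": "Here, 'bank' means the side of a river.",
    "unknown": "I am not sure which meaning of 'bank' is intended.",
}


def understand(sentence):
    """Toy NLU: map a sentence to a formal representation (a dict)."""
    tokens = set(re.findall(r"[a-z]+", sentence.lower()))
    if "bank" not in tokens:
        return {"word": None, "sense": None}
    # Score each sense by how many of its clue words appear in the context.
    scores = {sense: len(clues & tokens) for sense, clues in SENSE_CLUES.items()}
    best = max(scores, key=scores.get)
    return {"word": "bank", "sense": best if scores[best] > 0 else "unknown"}


def generate(meaning):
    """Toy NLG: turn the formal representation back into a sentence."""
    if meaning["word"] is None:
        return "The sentence does not mention a bank."
    return TEMPLATES[meaning["sense"]]


meaning = understand("She sat on the bank of the river to go fishing.")
reply = generate(meaning)
print(meaning)
print(reply)
```

Even in this toy, the understanding step has to weigh context while the generation step is a simple lookup, which mirrors the point that NLG is generally the easier half.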

Applications

- Sentiment analysis

- Chatbot

- Speech Recognition

- Machine translation

- Autocompleting Text

- Spell Check

- Keyword Search

- Advertisement Matching

- Information Extraction

- Spam Detection

- Text Generation

- Automatic Summarisation

- Q&A

- Image Captioning

- Video Captioning (Transcript)

RPA (Robotic Process Automation)

Easily programmable software that handles highly repetitive, high-volume tasks. Industries that most commonly use RPA: banking, insurance, finance, telecommunications, IT, retail and healthcare.

According to Forrester Research, RPA stands to disrupt 230 million jobs globally.

Risks in RPA

Ernst & Young reported in 2017 that 30-50% of RPA projects initially fail.

Note: The report is from 2017, so the current data needs to be checked. The failure percentage may well be lower now.

Risk-1: Looking at an RPA project from an IT standpoint rather than a business standpoint. Tip: Business leaders are best positioned to direct a virtual workforce.

Risk-2: Targeting the wrong processes. Examples of simple, repetitive processes automated with RPA: customer support chatbots, email marketing campaigns, invoice processing, payroll processing, price comparison, sales orders, recruitment and more.

RPA is not designed for complex processes.

Risk-3: Change management. With RPA, humans are freed to do creative and high-level tasks. Employees should not look at it as a threat.

Risk-4: Automating too much.

Risk-5: Trusting only the skills, not the experience, of the RPA consultant.