What is a Large Language Model (LLM)?

An LLM is a deep learning model. Most LLMs are built on a class of deep learning architectures called transformer models (or transformer networks). A transformer is a neural network that learns context and meaning by tracking relationships in sequential data, such as the words in a sentence.

Two key innovations make transformers particularly well suited to large language models: positional encodings and self-attention.

Positional encoding records the position at which each input occurs within a sequence. Because the position is encoded in the input itself, the words of a sentence no longer have to be fed into the neural network one at a time, in order; they can all be processed at once.
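To make this concrete, here is a minimal sketch of one common scheme, the sinusoidal encoding from the original transformer paper. The text above does not say which scheme a given model uses, so treat the choice as an assumption; the function name, the toy sentence, and the dimension of 8 are purely illustrative.

```python
import math

def sinusoidal_position(pos, d_model):
    """Return the d_model-dimensional sinusoidal encoding for one position."""
    vec = []
    for i in range(d_model):
        # Each pair of dimensions (2k, 2k+1) shares one frequency.
        angle = pos / (10000 ** ((i // 2) * 2 / d_model))
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec

# Hypothetical toy sentence; d_model=8 is chosen only for readability.
words = ["the", "cat", "sat"]
encodings = [sinusoidal_position(p, 8) for p in range(len(words))]
for word, enc in zip(words, encodings):
    print(word, [round(x, 3) for x in enc])
```

Each position gets a distinct vector, so the model can recover word order even when all words are presented at the same time.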

Self-attention assigns a weight to each part of the input while processing it. This weight signifies the importance of that part relative to the rest of the input. In other words, models no longer have to dedicate the same attention to all inputs and can focus on the parts that actually matter. Which parts of the input the network should pay attention to is learned over time, as the model sifts through and analyzes mountains of data.
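The weighting described above can be sketched as scaled dot-product self-attention. This is a simplified illustration in plain Python: it assumes the queries, keys, and values are all the raw input vectors, whereas a real transformer first applies learned projection matrices. The three 2-dimensional vectors are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    weights, outputs = [], []
    for q in x:
        # Similarity of this token to every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return weights, outputs

# Hypothetical vectors for three tokens; the first two are similar.
x = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
weights, outputs = self_attention(x)
for row in weights:
    print([round(w, 3) for w in row])
```

In this toy input the first token puts more weight on the second token than on the third, because their vectors are more similar.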

These two techniques in conjunction allow for analyzing the subtle ways and contexts in which distinct elements influence and relate to each other over long distances, non-sequentially.

The ability to process data non-sequentially makes it possible to decompose a complex problem into many smaller computations that run simultaneously. GPUs are well suited to solving these types of problems in parallel, which allows large-scale processing of huge unlabelled datasets and enormous transformer networks.

An LLM can be thought of as just two files in a hypothetical directory: a program file (written in C, Python, or any other language) and a parameter file. Let’s consider Llama-2-70B, the 70-billion-parameter model released by Meta AI. At the time of writing, it was probably the most powerful open-weights model available. Meta released the weights, the architecture, and a paper, so anyone can study and work with the model. Many other models, such as the one behind ChatGPT, do not have a published architecture. One can only interact with such a model through its web interface.

In Llama-2-70B, each parameter is stored in 2 bytes (a float16 number), which is why the 70 billion parameters take up about 140 GB. The parameter file holds the weights of the neural network. We also need a program file that can run the network using those parameters. Together they form a self-contained package: download both files to a MacBook, compile the C code, and point it at the parameter file. There is no need to stay connected to the internet.
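The size arithmetic is easy to check. The helper below is only an illustration (the function name is made up), and it counts 1 GB as 10^9 bytes.

```python
def model_size_gb(n_params, bytes_per_param):
    """Size of a parameter file in gigabytes (1 GB = 10**9 bytes)."""
    return n_params * bytes_per_param / 1e9

print(model_size_gb(70e9, 2))  # 70B parameters stored as float16
print(model_size_gb(7e9, 2))   # 7B parameters stored as float16
```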

In practice, we usually run the Llama 7B model, because the 70B model runs roughly 10 times slower on a Mac.

Many organizations use domain-specific data to build their own custom models. Custom models are often smaller, more efficient, and faster than general-purpose LLMs. For example, BloombergGPT has 50 billion parameters and is targeted at financial applications.

How to obtain the parameters?

Creating the parameter file requires model training, which is an intensive, time-consuming, and costly process. We can think of model training as compressing a good chunk of the internet into a zip file, with a compression ratio of almost 10 times. It is not literally a zip file, though: zip is lossless compression, whereas training is lossy. Model training is different from model inference. Inference is just running the trained model, for example on a MacBook. Once we have the parameter file, running it is very cheap.

Because Llama is an open model, Meta released details about its training process. The corresponding figures for ChatGPT, Bard, and similar models are likely to be around 10 times larger.

What is a neural network?

It tries to predict the next word in the sequence.
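The next-word objective can be illustrated with a toy that simply counts which word follows which. This is not how an LLM works internally (an LLM uses a neural network with billions of parameters rather than a lookup table), and the corpus and function names are made up; it only shows what "next-word prediction" means.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of word, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))
```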

Steps to create a new AI model

Creating an AI model from a foundation model follows a five-stage workflow.

Stage 1: Prepare the data

- Gather a lot of data, potentially petabytes of data, from various sources, including open-source data and proprietary data.

- Process the data by categorizing it (e.g., which language is used), filtering out unwanted content (e.g., hate speech), and removing duplicates.

- The output of this stage is a “base data pile” that is tagged and versioned for tracking purposes.
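As a rough sketch of the filtering, deduplication, and tagging steps in Stage 1: the blocklist, the record layout, and the function name below are all hypothetical, and the language tag is assumed to have been supplied by an earlier categorization step. Real pipelines use far more sophisticated classifiers and near-duplicate detection.

```python
import hashlib

BLOCKLIST = {"badword"}  # hypothetical stand-in for a real content filter

def prepare(documents):
    """Normalize, filter, deduplicate, and tag raw documents."""
    seen = set()
    pile = []
    for doc in documents:
        text = " ".join(doc["text"].split())  # normalize whitespace
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        if any(word in BLOCKLIST for word in text.lower().split()):
            continue  # unwanted content
        seen.add(digest)
        # The short hash acts as a stable ID for tracking and versioning.
        pile.append({"id": digest[:12], "lang": doc.get("lang", "unknown"), "text": text})
    return pile

raw = [
    {"text": "Hello  world", "lang": "en"},
    {"text": "hello world", "lang": "en"},
    {"text": "some badword here", "lang": "en"},
    {"text": "Bonjour le monde", "lang": "fr"},
]
pile = prepare(raw)
print([d["text"] for d in pile])
```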

Stage 2: Train the model

- Choose a foundation model that matches your use case (e.g., a generative model for chatbots, or an encoder-only model for classification).

- Tokenize the data pile, which involves converting the data into tokens that the foundation model can understand.

- A data pile could result in trillions of tokens.

- Train the model on the tokenized data. This can be a time-consuming process, taking months for large models with many GPUs.

Stage 3: Validate the model

- Once training is finished, benchmark the model to assess its performance against a set of criteria.

- Create a model card that summarizes the model’s training process and benchmark scores.

- Up to stage 3, all steps are performed by a Data Scientist.

Stage 4: Tune the model

- This stage involves fine-tuning the model by providing additional data or prompts to improve its performance.

- This stage is typically done by application developers who don’t need to be AI experts.

- This stage is much quicker, often taking only a couple of hours.

Stage 5: Deploy the model

- The model can be deployed as a service on a public cloud or embedded into an application.

- The model can be continuously improved and iterated over time.

Why Are There So Many Foundation Models?

A foundation model is a large-scale neural network trained on a vast amount of data. It serves as a base or foundation for a multitude of applications. A foundation model can apply information that’s learned about one situation to a different situation it was not trained on, and this is called transfer learning.

For example, NASA has a large archive of earth science data (about 70 PB, expected to grow to about 300 PB by 2030) that can be used to gain insights into climate change. Foundation models can help automate the tedious process of annotating features in satellite images by extracting the structure of the images, so that fewer labeled examples are needed. A model built for flood and wildfire prediction can then be redeployed for tasks like tracking deforestation or predicting crop yields.

The ability of the foundation model to generate text for a wide variety of purposes without much instruction or training is called zero-shot learning. Different variations of this capability include one-shot or few-shot learning. Foundation models can be customized using several techniques to achieve higher accuracy. Some techniques include prompt tuning, fine-tuning, and adapters.

Hugging Face hosts a large collection of foundation models. NASA and IBM are working on an AI foundation model for Earth observation called the “IBM NASA Geospatial Model.” It is an open-source model available on Hugging Face.

What Are The Different Classes Of LLM?

Several classes of large language models are suited for different types of use cases:

- Encoder only: These models are typically suited for tasks that require understanding language, such as classification and sentiment analysis. Examples of encoder-only models include BERT (Bidirectional Encoder Representations from Transformers).

- Decoder only: This class of models is extremely good at generating language and content. Some use cases include story writing and blog generation. Examples of decoder-only architectures include GPT-3 (Generative Pretrained Transformer 3).

- Encoder-decoder: These models combine the encoder and decoder components of the transformer architecture to both understand and generate content. Some use cases where this architecture shines include translation and summarization. Examples of encoder-decoder architectures include T5 (Text-to-Text Transfer Transformer).

What’s a token?

In the context of Large Language Models (LLMs), the term “token” refers to a chunk of text that the model reads or generates.

A token is typically not a word; it could be a smaller unit, like a character or a part of a word, or a larger one like a whole phrase.

In the GPT architecture, a sequence of tokens serves as the input and/or output during both training and inference (the stage where the model generates text based on a given prompt).

For example, in the sentence “Hello, world!”, the tokens might be [“Hello”, “,”, “ world”, “!”] depending on how the tokenization is performed.
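One simple way to obtain exactly that split is a regular expression that keeps a leading space attached to the following word. This is only an illustrative rule-based tokenizer (the function name is made up), not the tokenizer of any particular model.

```python
import re

def simple_tokenize(text):
    """Split into words (with an optional leading space) and single punctuation marks."""
    return re.findall(r" ?\w+|[^\w\s]", text)

print(simple_tokenize("Hello, world!"))  # ['Hello', ',', ' world', '!']
```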

What’s tokenization?

Tokenization is the process of splitting the input and output text into smaller units that an LLM can process. Tokens can be words, characters, subwords, or symbols, depending on the type and the size of the model.

Tokenization can help the model to handle different languages, vocabularies, and formats, and to reduce the computational and memory costs. Tokenization can also affect the quality and the diversity of the generated texts, by influencing the meaning and the context of the tokens. Tokenization can be done using different methods, such as rule-based, statistical, or neural, depending on the complexity and the variability of the texts.

What’s the tokenization method of GPT-4?

OpenAI uses a subword tokenization method called “Byte-Pair Encoding (BPE)” for its GPT-based models. BPE repeatedly merges the most frequently occurring pair of adjacent characters or bytes into a single new token, until a target number of merges or vocabulary size is reached. BPE helps the model handle rare or unseen words and creates more compact and consistent representations of the text.
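The merge loop can be sketched in a few lines. This is a character-level toy under stated assumptions, not OpenAI's implementation (which operates on bytes and has many refinements); the word frequencies and function names are invented for illustration.

```python
from collections import Counter

def most_frequent_pair(words):
    """Find the most frequent pair of adjacent symbols across all words."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get) if pairs else None

def merge_pair(words, pair):
    """Replace every occurrence of pair with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    """Learn up to num_merges merge rules, starting from single characters."""
    words = {tuple(w): f for w, f in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        words = merge_pair(words, pair)
        merges.append(pair)
    return merges, words

# Hypothetical toy corpus: word -> frequency.
merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)
print(merges)
print(vocab)
```

After a few merges, the frequent ending "est" becomes a single token, while rarer words remain split into smaller pieces.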

Try tokenizer on OpenAI: https://platform.openai.com/tokenizer

A helpful rule of thumb is that one token generally corresponds to ~4 characters of text for common English text. This translates to roughly ¾ of a word (so 100 tokens ~= 75 words).
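The rule of thumb translates directly into a quick estimator. The function names are made up, and the results are rough approximations for common English text only; for exact counts, use the model's actual tokenizer.

```python
def estimate_tokens(text):
    """Rough estimate: about 4 characters per token."""
    return round(len(text) / 4)

def estimate_words(n_tokens):
    """Rough estimate: about 3/4 of a word per token."""
    return n_tokens * 0.75

print(estimate_tokens("a" * 400))  # 400 characters -> about 100 tokens
print(estimate_words(100))         # 100 tokens -> about 75 words
```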

How about Llama-2 tokenization?

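The LLaMA tokenizer is a BPE model based on SentencePiece.

It has a vocabulary of 32000 tokens. Llama-2 was trained with a context window of 4096 tokens (n_ctx_train in the log below); 8192 is the embedding dimension of the 70B model, not its context length. See below for the dimensions of the Llama-2 token embedding and output tensors: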

    tensor 0: token_embd.weight q4_0 [ 8192, 32000, 1, 1 ]
    tensor 1: output_norm.weight f32 [ 8192, 1, 1, 1 ]
    tensor 2: output.weight q6_K [ 8192, 32000, 1, 1 ]

    {
      "dim": 8192,
      "multiple_of": 4096,
      "ffn_dim_multiplier": 1.3,
      "n_heads": 64,
      "n_kv_heads": 8,
      "n_layers": 80,
      "norm_eps": 1e-5,
      "vocab_size": -1
    }

More comprehensive info:

    llm_load_print_meta: n_vocab = 32000
    ...
    llm_load_print_meta: n_ctx_train = 4096
    llm_load_print_meta: n_ctx = 512
    llm_load_print_meta: n_embd = 8192
    llm_load_print_meta: n_head = 64
    llm_load_print_meta: n_head_kv = 8
    llm_load_print_meta: n_layer = 80
    llm_load_print_meta: n_rot = 128
    llm_load_print_meta: n_gqa = 8
    llm_load_print_meta: f_norm_eps = 1.0e-05
    llm_load_print_meta: f_norm_rms_eps = 1.0e-05
    llm_load_print_meta: n_ff = 28672
    llm_load_print_meta: freq_base = 10000.0
    llm_load_print_meta: freq_scale = 1
    llm_load_print_meta: model type = 70B
    llm_load_print_meta: model ftype = mostly Q4_0
    llm_load_print_meta: model size = 68.98 B
    llm_load_print_meta: general.name = LLaMA v2
    llm_load_print_meta: BOS token = 1 '<s>'
    llm_load_print_meta: EOS token = 2 '</s>'
    llm_load_print_meta: UNK token = 0 '<unk>'
    llm_load_print_meta: LF token = 13 '<0x0A>'
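A few of the numbers in this output can be cross-checked against each other. The snippet below copies four values from the log by hand; the derived relationships (head dimension matching n_rot, and the head ratio matching n_gqa) are consistent with the log, but reading them this way is an interpretation rather than something the log states.

```python
# Values copied from the loader output above.
meta = {"n_vocab": 32000, "n_embd": 8192, "n_head": 64, "n_head_kv": 8}

# token_embd.weight has shape [8192, 32000]: one 8192-dim vector per token.
embedding_params = meta["n_vocab"] * meta["n_embd"]

# Dimension handled by each attention head (the log reports n_rot = 128).
head_dim = meta["n_embd"] // meta["n_head"]

# Query heads per key/value head (the log reports n_gqa = 8).
gqa_groups = meta["n_head"] // meta["n_head_kv"]

print(embedding_params, head_dim, gqa_groups)
```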

Why 32000 vocabulary size?

A good trade-off with regards to vocabulary size is around 32000 tokens for a single language vocabulary. This also has the benefit of fitting easily within 16 bits, which makes handling tokenized data easier in many cases.

0x7fff = 32767 is the largest signed 16-bit integer, so every token ID from 0 to 31999 fits.
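The 16-bit claim can be verified directly. The three token IDs packed at the end are arbitrary examples.

```python
import struct

vocab_size = 32000
max_int16 = int("7fff", 16)            # largest signed 16-bit integer
largest_id = vocab_size - 1            # token IDs run from 0 to 31999
bits_needed = largest_id.bit_length()  # bits required for the largest ID

print(max_int16, largest_id <= max_int16, bits_needed)

# Three arbitrary token IDs stored as little-endian unsigned 16-bit integers.
packed = struct.pack("<3H", 1, 31999, 2)
print(len(packed))  # 2 bytes per token ID
```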

Why not make tokens smaller (e.g., character-level)?

Pros:

- Flexibility: Smaller tokens allow the model to generate and understand a wider range of words, including those it has never seen before, by combining smaller pieces.

- Memory Efficiency: Because tokens are smaller, the vocabulary size is typically reduced, which saves memory and computational resources in some respects.

- Language Agnosticism: Smaller tokens are often better at handling multiple languages or code, especially when those languages have different syntactic or grammatical structures.

- Handling Typos and Spelling Variants: Smaller tokens can be more robust to variations in spelling and potentially better at handling typos.

Cons:

- Computational Overhead: Smaller tokens mean that a given piece of text will be divided into more tokens, thereby increasing the computational cost for processing the text.

- Context Limitation: Due to the fixed maximum token limit, using smaller tokens may result in the model being able to consider less “context” in terms of the actual content.

- Ambiguity: Smaller tokens might lead to more ambiguous representations, making it harder for the model to understand the meaning of a sequence of tokens without sufficient context.

Why not make tokens larger (e.g., word-level, phrase-level)?

Pros:

- Computational Efficiency: Larger tokens reduce the number of tokens needed to represent a text, making processing more efficient in terms of computation.

- Better Context: Given a fixed maximum token count, larger tokens allow the model to consider a longer stretch of text, potentially leading to better understanding and generation capabilities.

- Reduced Ambiguity: Larger tokens might capture more nuanced meanings directly, reducing the ambiguity that arises from breaking down words into smaller pieces.

Cons:

- Vocabulary Size: Larger tokens typically require a larger vocabulary to capture the same range of text, which can be memory-intensive.

- Reduced Flexibility: Larger tokens may limit the model’s ability to generalize to unseen or rare words, as the entire token has to match something in the model’s vocabulary.

- Language Limitation: Larger tokens may not be as effective for languages with complex morphology or syntax, or for tasks that require understanding multiple languages.

- Handling Variability: Larger tokens can be sensitive to typos, variations in spelling, and other minor changes in the text.
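The trade-off between token size and sequence length is easy to see on a single sentence. The sketch below compares character-level and word-level splitting; the function names, the example sentence, and the 5-token limit are made up for illustration.

```python
def tokenize(text, level):
    """Split text at a given granularity: 'char' or 'word'."""
    if level == "char":
        return list(text)
    if level == "word":
        return text.split()
    raise ValueError(f"unknown level: {level}")

def text_covered(text, level, max_tokens):
    """Return the portion of text that fits into max_tokens tokens."""
    tokens = tokenize(text, level)[:max_tokens]
    return "".join(tokens) if level == "char" else " ".join(tokens)

sentence = "Tokenization trades vocabulary size against sequence length."
for level in ("char", "word"):
    n = len(tokenize(sentence, level))
    print(level, n, repr(text_covered(sentence, level, max_tokens=5)))
```

With the same 5-token budget, character-level tokens cover only part of the first word, while word-level tokens cover most of the sentence.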

Why not use phrase-level tokens? (answer from GPT-4)

- Vocabulary Size: The number of possible phrases in a language is combinatorially large, making it impractical to maintain a vocabulary of all possible phrases. This could lead to an enormous vocabulary size, which would be computationally inefficient to manage and process.

- Generalization: One of the strengths of word- or subword-level tokens is the ability to generalize from the training data to unseen data. Phrase-level tokens could severely limit this capability because the model would only be able to generate or understand phrases it has been explicitly trained on.

- Flexibility and Compositionality: Language is highly flexible and compositional. The meaning of a sentence often depends not just on the individual words or phrases but also on their arrangement. Using phrase-level tokens could make it more difficult for the model to understand and generate a wide variety of sentences.

- Ambiguity: Phrases can have different meanings depending on context. Storing them as single tokens could make it difficult for the model to learn these nuances.

- Language-Specific Issues: Phrase-level tokenization would be highly language-dependent and might not generalize well across different languages, dialects, or even different domains of discourse within the same language.

- Data Sparsity: Due to the sheer number of possible phrases, many would appear rarely in the training data, making it difficult for the model to learn their nuances or to assign them meaningful embeddings.

- Memory and Computational Overheads: Storing phrase-level tokens would likely require more memory and computational power, not just because of the larger vocabulary but also due to the complexity of managing such a vocabulary efficiently.

- Token Limit: Large language models have a maximum token limit for each sequence they process. If phrase-level tokens are used, each token would represent a larger chunk of text, which could be problematic for tasks that require fine-grained analysis or generation.

For specialized tasks, phrase-level tokens might be useful and could be used in combination with word- or character-level tokens. However, for general-purpose language models, the challenges often outweigh the benefits.

Is there a better trade-off?

Consider a vocabulary in which each token is a phrase rather than a subword, as in GPT-4 and Llama. Although such a vocabulary may generalize less well and be less robust to misspellings, it has clear advantages for users:

- It saves users money, since a prompt and its output will contain fewer tokens.

- It is also less expensive at inference time, since it takes fewer autoregressive iterations to generate the same amount of text.

There are several other tokenization methods:

- Unigram: at each stage of training, it computes the probability of each subword token and the loss that would result if that token were dropped from the vocabulary. It then removes the tokens whose removal increases the overall loss the least, that is, the tokens that add the least value to the set. Unigram is not used directly by any of the models in the Transformers library, but it is used in conjunction with SentencePiece.

- SentencePiece: While existing subword segmentation tools assume that the input is pre-tokenized into word sequences, SentencePiece can train subword models directly from raw sentences, which makes a purely end-to-end and language-independent system possible.

- AI21’s Jurassic models: the models utilize a unique 250,000-token vocabulary which is not only much larger than most existing vocabularies (5x or more), but also the first to include multi-word tokens such as expressions, phrases, and named entities. Because of this, Jurassic-1 needs fewer tokens to represent a given amount of text, thereby improving computational efficiency and reducing latency significantly.

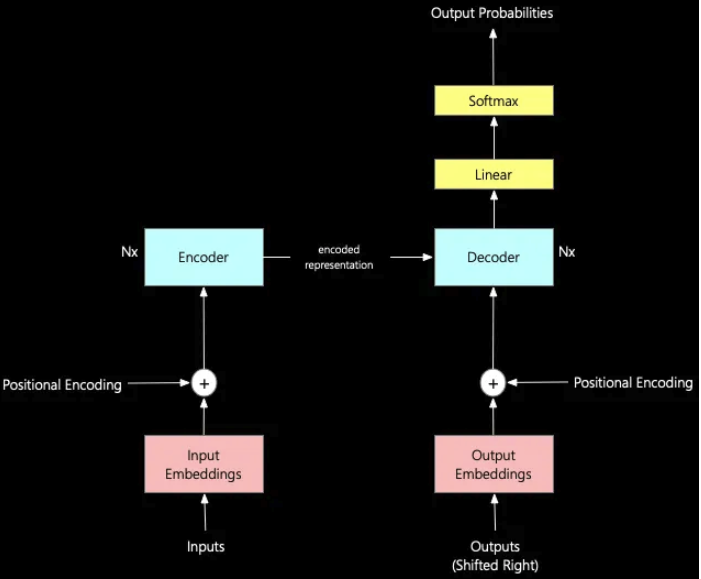

Transformer

The transformer architecture was introduced in the paper “Attention Is All You Need,” first released in June 2017 and presented at the NeurIPS conference in December 2017.

There are seven important components in the transformer architecture.

- Inputs and Input Embeddings: The tokens entered by the user are considered inputs for the machine learning models. However, models only understand numbers, not text, so these inputs need to be converted into a numerical format called “input embeddings.” Input embeddings represent words as numbers, which machine learning models can then process. These embeddings are like a dictionary that helps the model understand the meaning of words by placing them in a mathematical space where similar words are located near each other. During training, the model learns how to create these embeddings so that similar vectors represent words with similar meanings.

- Positional Encoding: In natural language processing, the order of words in a sentence is crucial for determining the sentence’s meaning. However, the self-attention layers of a transformer process all tokens at once and do not inherently know the order of their inputs. To address this, positional encoding represents the position of each token in the input sequence as a set of numbers, which are fed into the transformer along with the input embeddings. With positional encoding, GPT can take word order into account and generate grammatically correct and semantically meaningful output.

- Encoder: The encoder is the part of the neural network that processes the input text and generates a series of hidden states that capture the meaning and context of the text. The text is first tokenized into a sequence of tokens, such as individual words or subwords. The encoder then applies a stack of self-attention layers to produce hidden states that represent the input at different levels of abstraction. The transformer uses multiple encoder layers. Note that GPT models are decoder-only and have no separate encoder; they apply the same kind of self-attention layers inside the decoder.

- Outputs (shifted right): During training, the decoder learns to predict the next word by looking at the words before it. To do this, the output sequence is shifted one position to the right, so that the decoder can only use the previous words. GPT is trained on a huge amount of text, which helps it produce coherent writing. GPT-3, for example, has 175 billion parameters. Text corpora used to train GPT models include the Common Crawl web corpus, the BooksCorpus dataset, and English Wikipedia. These corpora contain billions of words and sentences, so GPT has a lot of language data to learn from.

- Output Embeddings: As with the inputs, the model can only work with numbers, not text, so the output tokens must also be converted to a numerical format, known as “output embeddings.” Output embeddings are similar to input embeddings and also go through positional encoding, which helps the model understand the order of words in a sentence. During training, a loss function measures the difference between the model’s predictions and the actual target tokens, and the model’s parameters are adjusted to reduce that difference, which improves the model’s overall performance. During inference, the output text is generated by mapping the model’s predicted probabilities to the corresponding tokens in the vocabulary.

- Decoder: The positionally encoded input representation and the positionally encoded output embeddings go through the decoder. The decoder is the part of the model that generates the output sequence. During training, it learns to predict the next word by looking at the words before it. In an encoder-decoder transformer, the decoder also attends to the context produced by the encoder; GPT, which is decoder-only, generates natural language text from the input sequence alone. As with the encoder, the transformer stacks multiple decoder layers.

- Linear Layer and Softmax: After the decoder produces its final hidden states, the linear layer projects each one to a vector with one score (logit) per token in the vocabulary. The softmax function then turns these scores into a probability distribution over the vocabulary, from which the next output token is chosen.
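The final step in the list above, a linear projection followed by softmax, can be sketched in plain Python. All sizes and numbers are hypothetical toy values (a hidden dimension of 3 and a vocabulary of 4 tokens), and the example picks the most probable token, whereas real systems often sample from the distribution instead.

```python
import math

def linear(hidden, weight, bias):
    """Project a hidden vector to one logit per vocabulary entry."""
    return [sum(h * w for h, w in zip(hidden, row)) + b for row, b in zip(weight, bias)]

def softmax(logits):
    """Convert logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy values: one weight row per vocabulary token.
vocab = ["<unk>", "cat", "sat", "mat"]
hidden = [0.2, -0.1, 0.7]
weight = [[0.0, 0.0, 0.0], [1.0, 0.5, -0.3], [0.2, 0.1, 2.0], [-1.0, 0.0, 0.5]]
bias = [0.0, 0.0, 0.0, 0.0]

probs = softmax(linear(hidden, weight, bias))
next_token = vocab[probs.index(max(probs))]
print([round(p, 3) for p in probs], next_token)
```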

The Concept of Attention Mechanism

Attention is all you need.

The transformer architecture outperforms alternatives such as recurrent neural networks (RNNs) and long short-term memory networks (LSTMs) for natural language processing. The superior performance is mainly due to the “attention mechanism” that the transformer uses. The attention mechanism lets the model focus on different parts of the input sequence when producing each output token.

- The RNNs don’t bother with an attention mechanism. Instead, they just plow through the input one word at a time. On the other hand, Transformers can handle the whole input simultaneously. Handling the entire input sequence, all at once, means Transformers do the job faster and can handle more complicated connections between words in the input sequence.

- LSTMs use a hidden state to remember what happened in the past. Still, they can struggle to learn dependencies across very long sequences (a.k.a. the vanishing gradient problem). Meanwhile, Transformers perform better because they can look at all the input and output words simultaneously and figure out how they’re related. Thanks to the attention mechanism, they’re really good at understanding long-term connections between words.

Let’s summarize:

- It lets the model selectively focus on different parts of the input sequence instead of treating everything the same way.

- It can capture relationships between inputs far away from each other in the sequence, which is helpful for natural language tasks.

- It needs fewer parameters to model long-term dependencies since it only has to pay attention to the inputs that matter.

- It’s really good at handling inputs of different lengths since it can adjust its attention based on the sequence length.